Best Practices in Prompt Engineering

This blog post is a very short guide to prompt engineering. The discipline is gaining importance not only for developers but for everybody who wants to use ChatGPT or other tools to work more efficiently.

What is Prompt Engineering?

Simply put, prompt engineering is a method for working efficiently with a large language model (LLM). It involves:

1) Designing, developing and optimizing "prompts", i.e. the requests that are sent to a large language model

2) Identifying existing vulnerabilities in a model

3) Making models more reliable, efficient (less time-consuming when calling the APIs) and safe (fewer hallucinations)

How does Prompt Engineering work?

Usually a prompt engineer interacts with the LLM either via an API or directly. In doing so, the prompt engineer can configure a few parameters to get different results for the same prompt.
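
To make these parameters concrete, here is a minimal Python sketch that only builds a request body and does not send it. It assumes the JSON shape of the OpenAI Chat Completions API, where `model`, `messages` and `temperature` are request fields. Other providers use similar but not identical fields. The model name is a placeholder, and the helper `build_chat_request` is hypothetical.

```python
import json


def build_chat_request(prompt: str, temperature: float) -> dict:
    """Build a chat-style request body (hypothetical helper); nothing is sent over the network."""
    return {
        "model": "your-model-name",  # placeholder: replace with a real model id
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # controls how random the sampled answer is
    }


factual = build_chat_request("What is the capital of France?", temperature=0.2)
creative = build_chat_request("Write a short story about a robot.", temperature=1.0)
print(json.dumps(factual, indent=2))
```

The prompt stays the same kind of request in both cases. Only the parameter changes, and with it the style of the answer you can expect.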

One of the most important parameters is the temperature. The lower the temperature, the more deterministic the result; the higher the temperature, the more diverse and creative the answers. So if you build a Q&A chatbot, you might want to use a low temperature to keep the answers fact-based. For storytelling or content creation, you would rather increase the temperature.
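
Why does temperature have this effect? Conceptually, the model assigns a score (logit) to each candidate next token. The scores are divided by the temperature, and a softmax turns them into sampling probabilities. The Python sketch below uses made-up scores for three tokens. Real providers may add further steps such as top-p sampling, and many treat temperature 0 as always picking the most likely token, which this sketch does not model.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw token scores into probabilities, scaled by temperature (> 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

At a low temperature almost all of the probability lands on the top-scoring token, so repeated runs tend to give the same answer. At a high temperature the distribution flattens and less likely tokens are picked more often.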

What are the principles of good prompts?

1) Good prompts are structured: use delimiters (like quotes) to separate the parts of the input, ask for structured output such as JSON or HTML, and ask the model to check whether the conditions and assumptions required to do the task are satisfied.

2) Give the model "time to think": break down the task you want ChatGPT or another LLM to complete into several structured subtasks. Just as a human would split a problem into several steps to solve it, do the same with the machine.
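
Both principles can be combined in one prompt. This minimal Python sketch assumes a made-up task: summarizing a text and listing the people in it. The prompt marks the input with triple-quote delimiters, spells out numbered subtasks, includes a condition check, and asks for JSON. The helper names `build_structured_prompt` and `parse_model_reply` are hypothetical. The reply parsed at the end is hand-written, not real model output.

```python
import json


def build_structured_prompt(text: str) -> str:
    """Build a prompt with delimited input, numbered steps and a JSON output format."""
    return (
        "Perform the following steps on the text delimited by triple quotes.\n"
        "1. Summarize the text in one sentence.\n"
        "2. List the names of all people mentioned in the text.\n"
        "3. If no people are mentioned, use an empty list.\n"
        'Return only a JSON object with the keys "summary" and "people".\n'
        f'"""{text}"""'
    )


def parse_model_reply(reply: str) -> dict:
    """Check that a reply is a JSON object containing the expected keys."""
    data = json.loads(reply)  # raises a ValueError subclass on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    missing = {"summary", "people"} - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data


prompt = build_structured_prompt("Anna and Ben planned a trip to Rome.")
print(prompt)

# Hand-written stand-in for a model response, not real output.
reply = '{"summary": "Two friends planned a trip to Rome.", "people": ["Anna", "Ben"]}'
print(parse_model_reply(reply)["people"])
```

Validating the reply in code is what makes structured output useful. If the model drifts from the requested format, the program fails loudly instead of silently passing on malformed text.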

What is Zero-Shot or Few-Shot Prompting?

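Zero-shot prompts ask the language model to perform a task from the instruction alone, without any examples. Few-shot prompts add one or a few demonstrations of how to do the task, from which the model learns the pattern within the prompt.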

Zero Shot Prompting Example:

Your task is to answer in a consistent way:

<Question>

<Answer>

Few Shot Prompting Example:

To classify the sentiment of a sentence, you can use few-shot prompting:

<I wish I could have gone on that trip> // sad

<Yey! I will be married soon!> // happy

<hm, I don't feel well> // sad

<So glad that you are here> // ?

Based on this structure, the LLM infers what to predict for the last sentence, in this case "happy".
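
The same idea can be expressed in code. This small Python sketch assembles a prompt in the format used above. With an empty list of examples it produces a zero-shot prompt; with labelled examples it produces a few-shot prompt. The function name `build_prompt` and the instruction line are assumptions added for illustration. The original example contains only the labelled sentences.

```python
def build_prompt(instruction, examples, query):
    """Return a zero-shot prompt if `examples` is empty, otherwise a few-shot prompt."""
    lines = [instruction, ""]
    # Each demonstration shows the model an input together with its label.
    for sentence, label in examples:
        lines.append(f"<{sentence}> // {label}")
    # The final line leaves the label open for the model to fill in.
    lines.append(f"<{query}> // ?")
    return "\n".join(lines)


instruction = "Classify the sentiment of each sentence as happy or sad."  # assumed instruction
examples = [
    ("I wish I could have gone on that trip", "sad"),
    ("Yey! I will be married soon!", "happy"),
    ("hm, I don't feel well", "sad"),
]
query = "So glad that you are here"

zero_shot = build_prompt(instruction, [], query)
few_shot = build_prompt(instruction, examples, query)
print(few_shot)
```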

What should I do when I do not know how to prompt ChatGPT?

You can always ask ChatGPT itself to rephrase your question (e.g. "From now on, optimize my question and ask me whether I should use it.") or improve your prompt with tools that help you write better prompts. A free one is: https://chat.openai.com/share/c1b9f609-e3ac-497a-bf77-a56e1bfd5fb6.

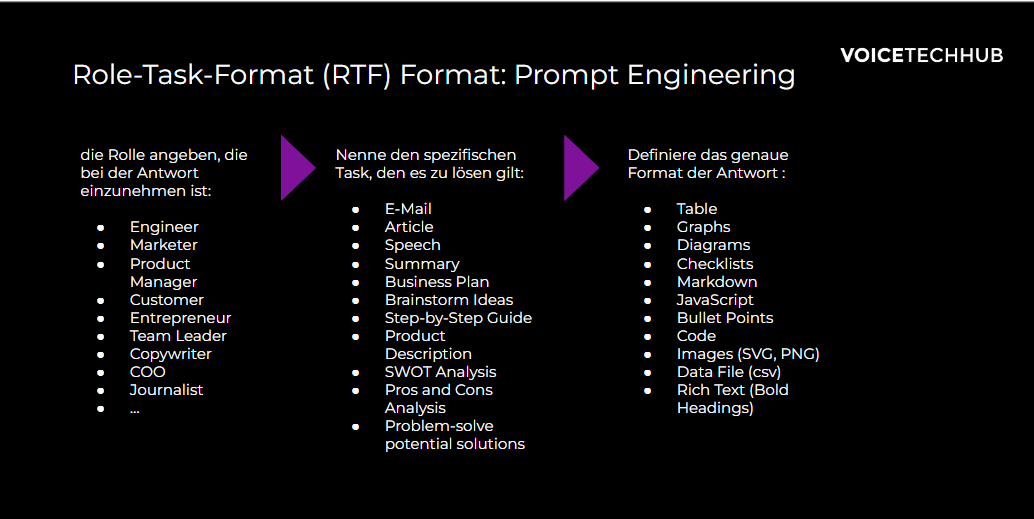

The Role Task Format (RTF) is a good starting point for learning how to ask ChatGPT: state a role, the task, and the format you want the answer in. An example is: "As an analyst, I need to create the most relevant KPIs about topic {XYZ} in a table."
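
The RTF pattern can be captured as a tiny template. This Python sketch shows one possible phrasing, not a fixed standard. The function name `rtf_prompt` is hypothetical, and "XYZ" remains a placeholder for your topic.

```python
def rtf_prompt(role: str, task: str, output_format: str) -> str:
    """Combine a Role, a Task and an output Format into a single prompt."""
    return f"As {role}, I need to {task}. Present the result as {output_format}."


prompt = rtf_prompt(
    role="an analyst",
    task="create the most relevant KPIs about topic XYZ",
    output_format="a table",
)
print(prompt)
```

Keeping the three parts as separate arguments makes it easy to reuse the same task with a different role or output format.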

Need support with your Generative AI Strategy and Implementation?

🚀 AI Strategy, business and tech support

🚀 ChatGPT, Generative AI & Conversational AI (Chatbot)

🚀 Support with AI product development

🚀 AI Tools and Automation